While running an SSIS package that uses a Script Component, the Data Flow Task stayed yellow (in progress) for a few minutes and then failed with the following exception from the Script Component:

at Microsoft.SqlServer.Dts.Runtime.Wrapper.IDTSVariableDispenser100.GetVariables(IDTSVariables100& ppVariables)
at Microsoft.SqlServer.Dts.Pipeline.ScriptComponent.LockReadWriteVariables(String readWriteVariables)
at Microsoft.SqlServer.Dts.Pipeline.ScriptComponentHost.PostExecute()

I found the cause of this issue at the link below:

http://www.sqldev.org/sql-server-integration-services/memory-error-in-ssis-transformation-script-component-4422.shtml

That thread explains the reason for the exception, and the solution, as follows:

"The read-write lock is "granted" in (or before) the PreExecute phase of the Data Flow, and only released AFTER the PostExecute phase. All components' PreExecutes are run, THEN the data flow runs, then ALL the components' PostExecutes are run. SSIS does not run the PostExecute of one component in the flow as soon as the last row moves through it. SSIS does not guarantee the execution order of PostExecute calls to the components in the data flow. In effect, it can't - because it can't guarantee that component A will be completely finished before component B starts, and adding any such guarantee would completely muck up any optimization/parallelism it could attempt.

Therefore - you can not have two Scripts - or even two Row Count transforms (or a combination of the two) in a single Data Flow that write to the same variable. Because in each of those cases, SSIS "reserves" the variable from the time the flow starts until it ends.

In order to account for that, you'll need two (or more) variables - one for each "case" you want to report on."
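
To make the quoted explanation concrete, here is a small toy model in Python of the three phases it describes. This is only a sketch and not the SSIS API: `VariableDispenser`, `CountingComponent`, `run_data_flow` and the variable names `User::RowCount`, `User::CountA` and `User::CountB` are all hypothetical names made up for illustration. It also assumes the conflict is reported immediately, whereas in my package the task waited for a few minutes before failing.

```python
# Toy model of the locking behaviour described in the quote.
# NOT the SSIS API: every class, function and variable name is hypothetical.


class VariableLockedError(Exception):
    """Raised when a second component asks for a write lock that is already held."""


class VariableDispenser:
    """Hands out exclusive write locks on named package variables."""

    def __init__(self):
        self.values = {}
        self._owners = {}  # variable name -> component holding the write lock

    def lock_for_write(self, name, owner):
        holder = self._owners.get(name)
        if holder is not None and holder != owner:
            raise VariableLockedError(
                f"{owner} cannot lock {name}: already held by {holder}"
            )
        self._owners[name] = owner

    def release_all(self):
        self._owners.clear()


class CountingComponent:
    """Stands in for a Script Component or Row Count that writes one variable."""

    def __init__(self, name, variable):
        self.name = name
        self.variable = variable
        self.rows = 0

    def process(self, rows):
        self.rows += len(rows)


def run_data_flow(components, rows, dispenser):
    try:
        # Phase 1: every component reserves its variable before any rows flow.
        for c in components:
            dispenser.lock_for_write(c.variable, c.name)
        # Phase 2: rows move through the components.
        for c in components:
            c.process(rows)
        # Phase 3: all the "PostExecute" steps write their results.
        for c in components:
            dispenser.values[c.variable] = c.rows
    finally:
        # Locks are released only once the whole flow has ended.
        dispenser.release_all()
    return dispenser.values
```

In this model, running two components that both write to `User::RowCount` raises `VariableLockedError` in the first phase, while giving each component its own variable (for example `User::CountA` and `User::CountB`) lets the flow finish and leaves one count per variable. That is the same fix the quote recommends: one variable for each case you want to report on.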

Business Intelligence, Data Warehousing, OLAP Implementation, Reporting and many more...

Subscribe to:

Post Comments (Atom)

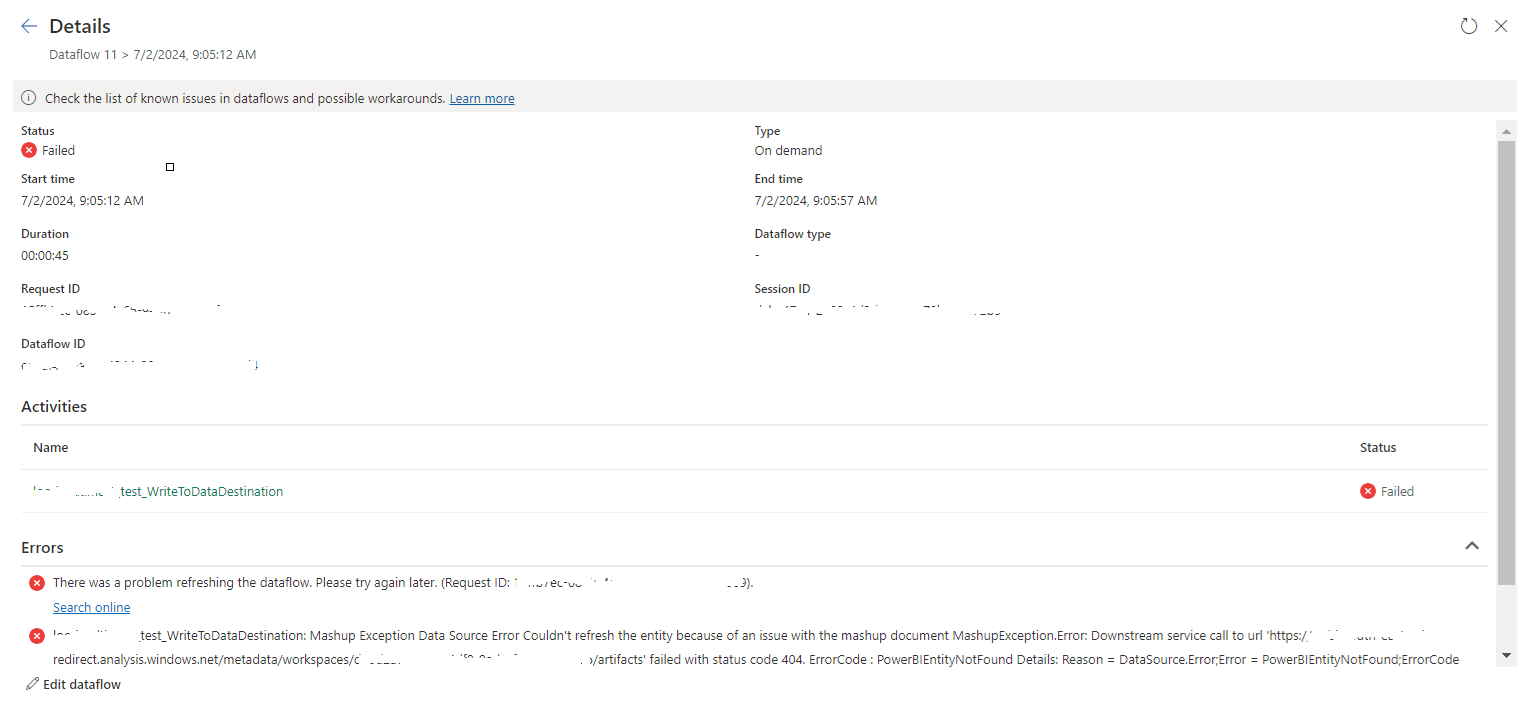

tablename_WriteToDataDestination: Mashup Exception Data Source Error Couldn't refresh the entity...

Once a Dataflow is created and published on Fabric, got the below error while refreshing the Dataflow. tablename_ WriteToDataDestination: M...

-

In SQL Report Subscription, you may want to send the report with the link and you can do it by selecting Include Link value. But some time...

-

In SQL Server Analysis Services Cube, there may be a situation where we need to combine two related fact tables. This kind of a situation ma...

-

When I tried to connect to a SharePoint URL in dashboard designer options, it prompt a message saying "The URL is not available, does n...

This helped me understand my error. thanks!
